Guide to SDXL Fine-Tuning with LoRA in Kohya-ss

Praveen

R&D @JarvisLabs.ai

Kohya_ss is a python library that is used to fine-tune Stable Diffusion models. In this blog we will use it's GUI to fine-tune the SDXL base 1.0 model to create an AI avatar of ourself.

Content

I am using the kohya_ss framework in jarvislabs.ai with RTX6000 ada 48GB as my GPU. You can use other GPUS with lower VRAM but you have to choose a lower training batch size, which results in extended training time.

Dataset Preparation

Dataset Preparation is one of the most crucial steps to achieve a good fine-tuned model. For this tutorial I have used 10 1024x1024 images of a woman I generated in Fooocus and swapped faces with other photos. High quality photos usually give better results.

|

|---|

| Examples from the dataset |

You can also find high quality images of celebrities using google advanced image search.

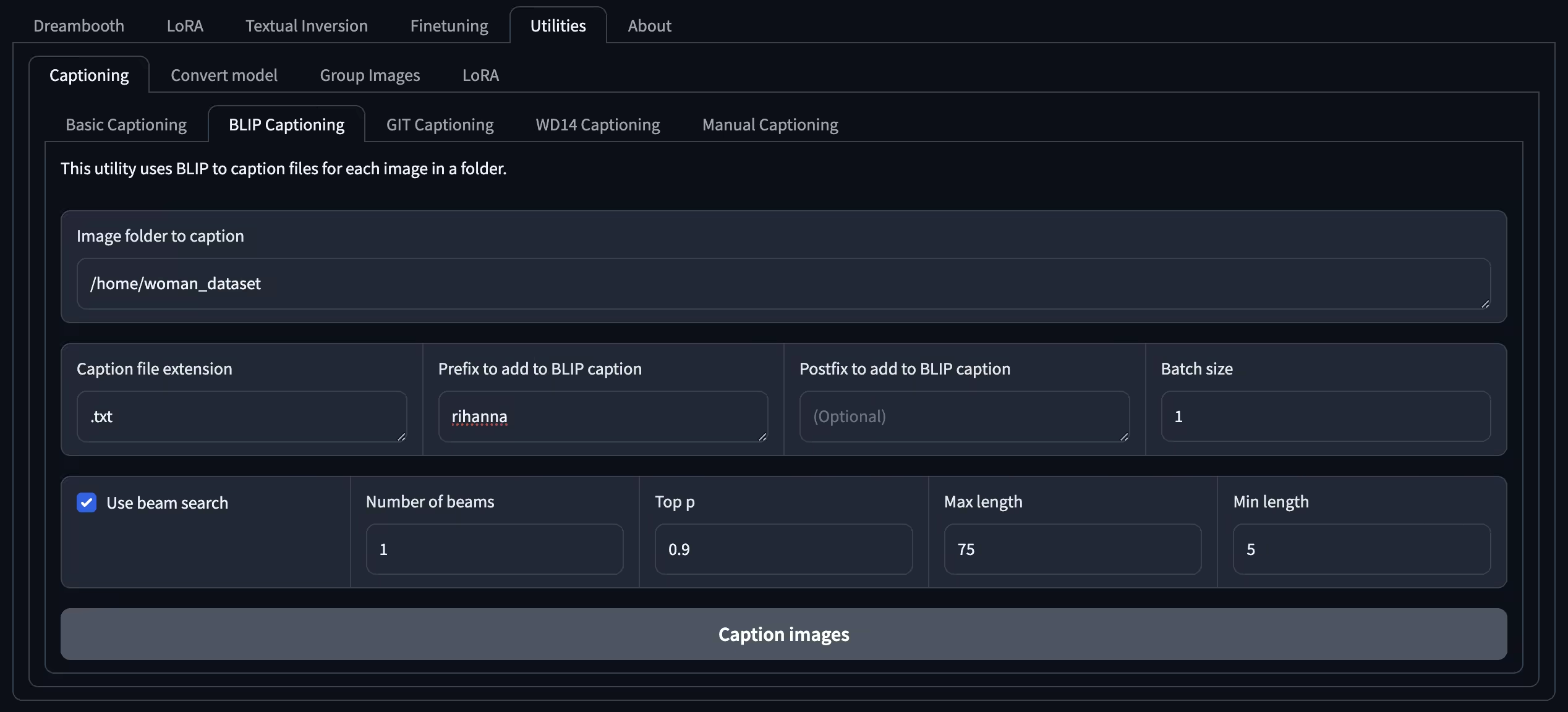

Captioning

Captioning helps the model to understand more about the training dataset images. We can use the blip captioning tool available in kohya_ss to caption our image.

To caption the images just give the path to your training images directory and press the caption images button. I will talk about why I chose rihanna as my prefix in the upcoming section.

After captioning we will be left with a .txt file matching the name of each image file.

|

|---|

| rihanna a woman with a green and black shirt and jeans |

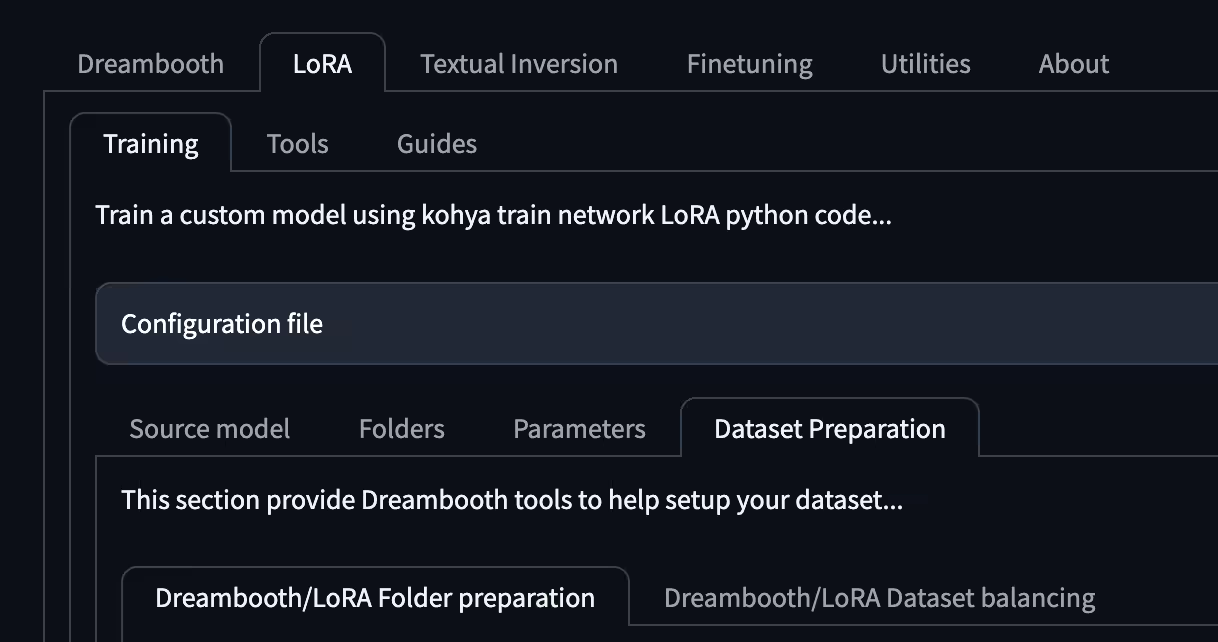

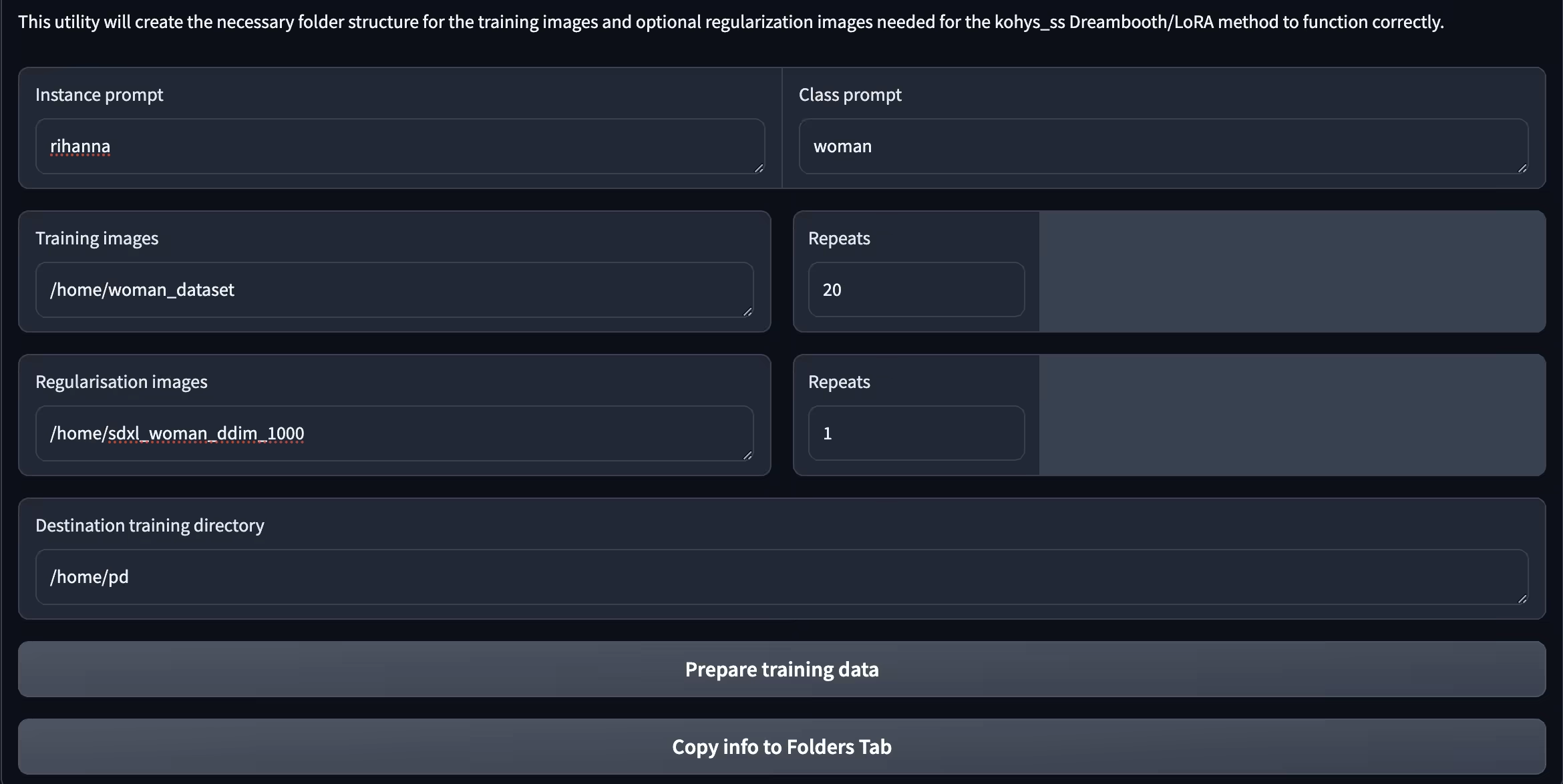

Dataset Format

Navigate to the dataset preparation tab as shown in the above image.

Instance Prompt: This is the prompt that is used to identify your training images. You can use the name of a celebrity that looks similar to the person in our dataset yields better result. Here I used rihanna because she looks similar to the woman in our dataset.

Class prompt: This represents what class our training subject belongs to. In this case I am using the prompt "woman".

Repeats: Repeats indicates how many time each images is used per epoch. There is a relation between repeats, batch size, steps and epochs. You can check it out in this section.

For training images and regularization images you have to specify the path to the directory. Using regularization images prevents overfitting of the model. I am using 1000 1024x1024 images of woman that I found here github.

Click on Prepare training data to start the data preparation.

This will be our final dataset structure.

pd/

├── img/

│ └── 20_rihanna_woman/ #training images

├── log/

├── model/

└── reg/ #regularisation images

Training Parameters

LoRA Type: Standard

Train batch size: 4 (choose a lower batch size if you have lower vram)

Epochs: 20

Mixed Precision & Save Precision: bf16 (Use fp16 for architectures below Ampere)

Learning Rate: 0.0003

Max Resolution: 1024,1024

Enable Buckets: True

Network Rank: 64

Network Alpha: 32

These are the settings that I used to fine-tune my model.

Relation between repeats, batch-size, steps and epochs

As mentioned in the above section, repeats, batch-size, steps and epochs have a relation. Let us breakdown this by using the parameters that I used as an example.

Repeats: 20

Number of Images: 10

Train batch size: 4

Epochs: 20

We will calculate how many total steps does 20 epochs on our dataset gives us.

Steps per Epoch = (Number of Images * Repeats) / Train Batch Size

In our case it is 50. By, multiplying steps per epoch by total epochs. We get total number of steps. So, for our parameters we get a total of 1000 steps. I usually train with 1000 steps for 10 images.

Make sure to play around with the parameters because a good model depends on lots of factors like dataset quality, caption quality, dataset size etc...

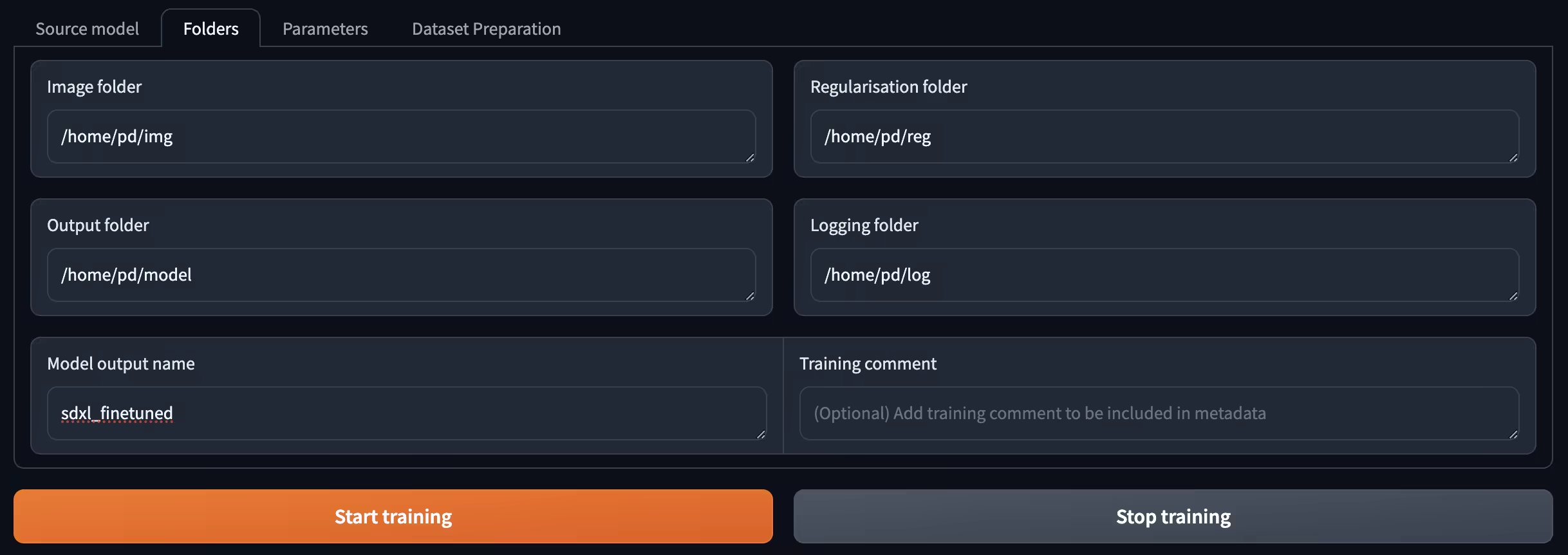

Training

To train your model navigate to the folders tab and paste the respective paths in correct inputs and press Start training.

It took around 35 minutes for the training to get done.

Inference

To generate images with our model, I have uploaded the weights to automatic1111 and used SDXL base 1.0 as the base model and generated some images.

Here are the results...

The settings that I have used

Other Settings

Steps: 50

Sampler: DPM++ 2M Karras

Face Restoration: None

CFG scale: 7

Size: 1024x1024

Wrap up

Fine-tuning your own AI avatar in Stable Diffusion does not have to be complicated. Kohya_ss offers a straightforward way to train an SDXL model with LoRA and create personalized results. Give it a try and see how easy it is to bring your creative visions to life! Thanks for reading 😃.