Apple ML Ferret

Table of Contents

TLDR

Apple ML Ferret is a open source Multimodal large language model (MLLM) developed by apple. It enables spatial understanding by using referring and grounding. By referring and grounding it can recognize and describe any shape in an image, offering precise understanding, especially of smaller image regions.

If you want to know more about Apple ML Ferret in detail, jump here.

Walkthrough

How to launch Apple ML Ferret in jarvisLabs

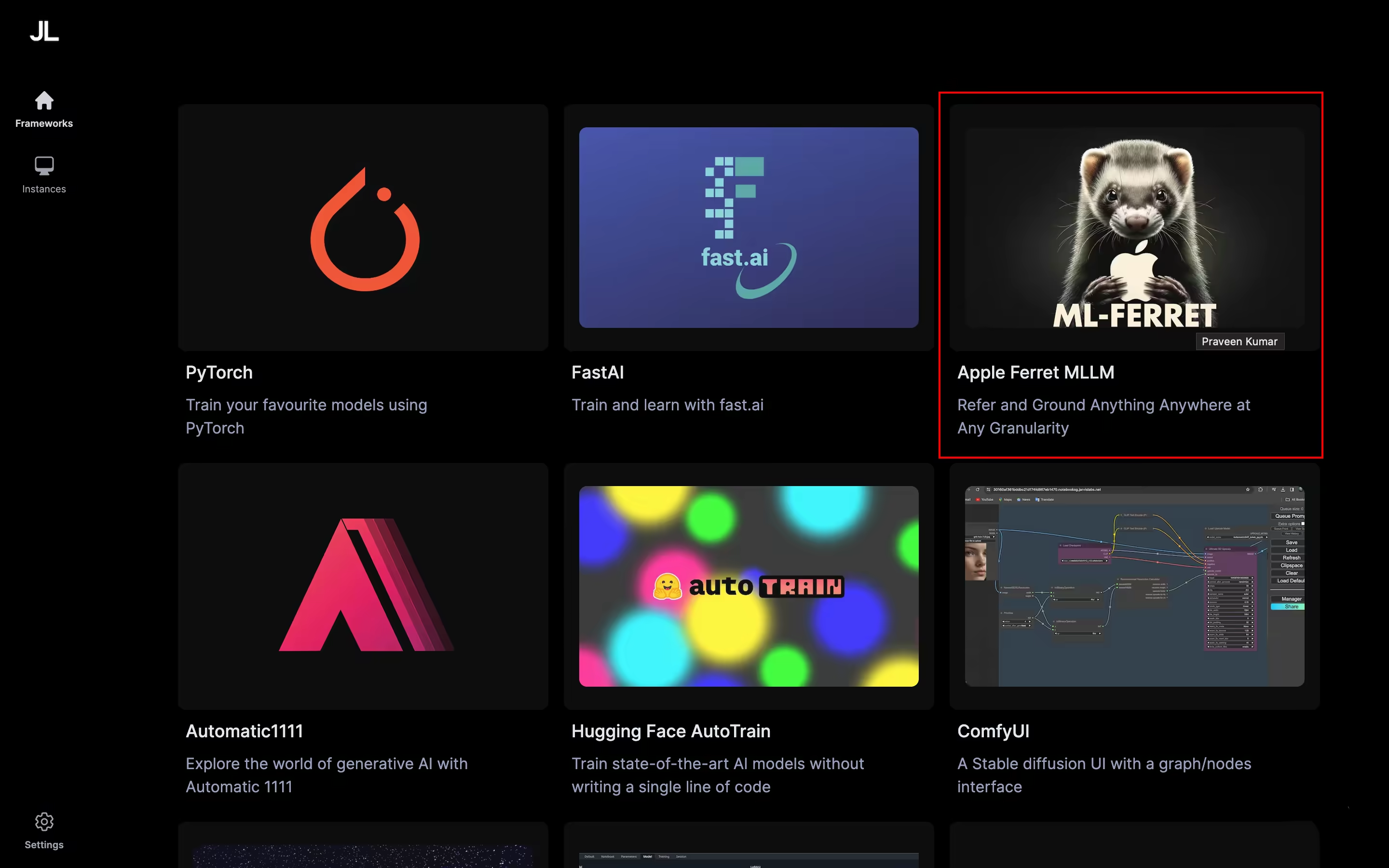

Navigate to the apple's ML Ferret instance on the jarvisLabs' frameworks page. Choose the Apple Ferret MLLM framework.

After you click the framework you will be redirected to the launch page. Check out the details about the launch page here.

Selecting models

In Apple ML Ferret you can see an extra option where you can choose different models that you want to use.

- ferret-7b-v1-3

- ferret-13b-v1-3

Please choose a higher end GPU like RTX6000Ada or A100 when selecting the ferret-13b-v1-3 model.

How to access the gradio dashboard

Then hit the launch button to launch your Apple ML Ferret instance.

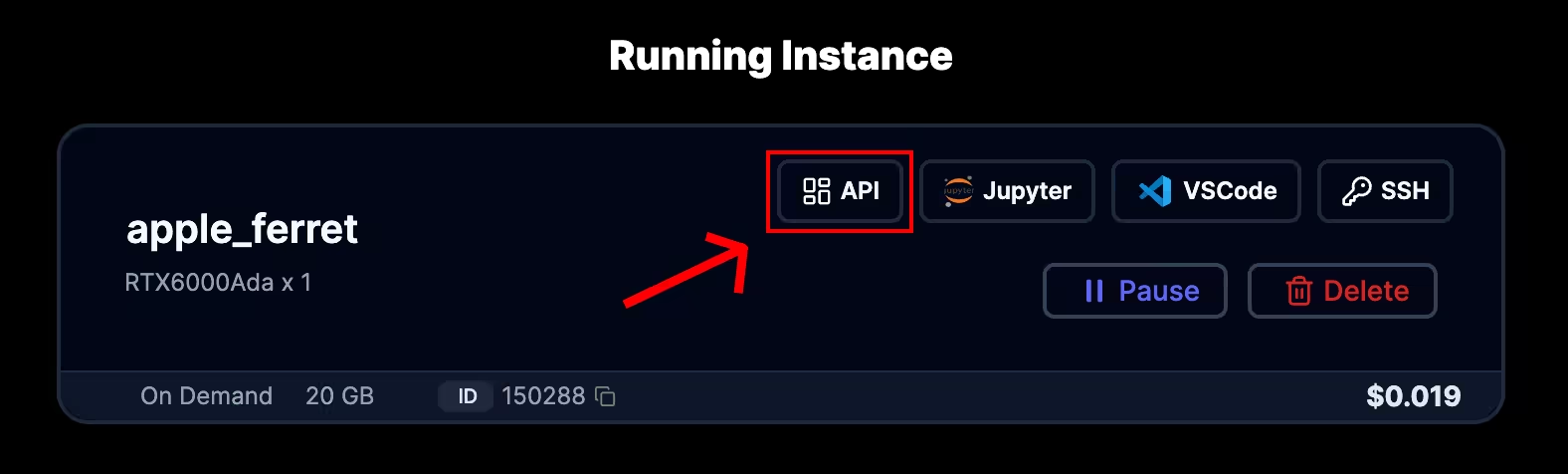

After the instance is successfully launched, you can navigate to your instances page and click the api of the Apple ML Ferret instance.

Please wait for few minutes for the model to download

Demo

Learn how to use Apple ML Ferret and how it works under the hood check out this video on our youtube.

Deep Dive

Apple ML Ferret is a Multimodal Large Language Model that is very much capable of understanding the spatial referring of any shape and accurately grounding open-vocabulary descriptions. It means that Apple ML Ferret is really good at identifying things and shapes in a picture and describe them using simple words.

Referring

Referring in Apple ML Ferret has two main aspects:

- Spatial referring

- Open-Vocabulary Referring

Spatial referring

Apple ML Ferret has the ability to analyze a picture and tell you where a specific object or thing is present in the image or to be more exact it tells you the co-ordinates of the object in the picture.

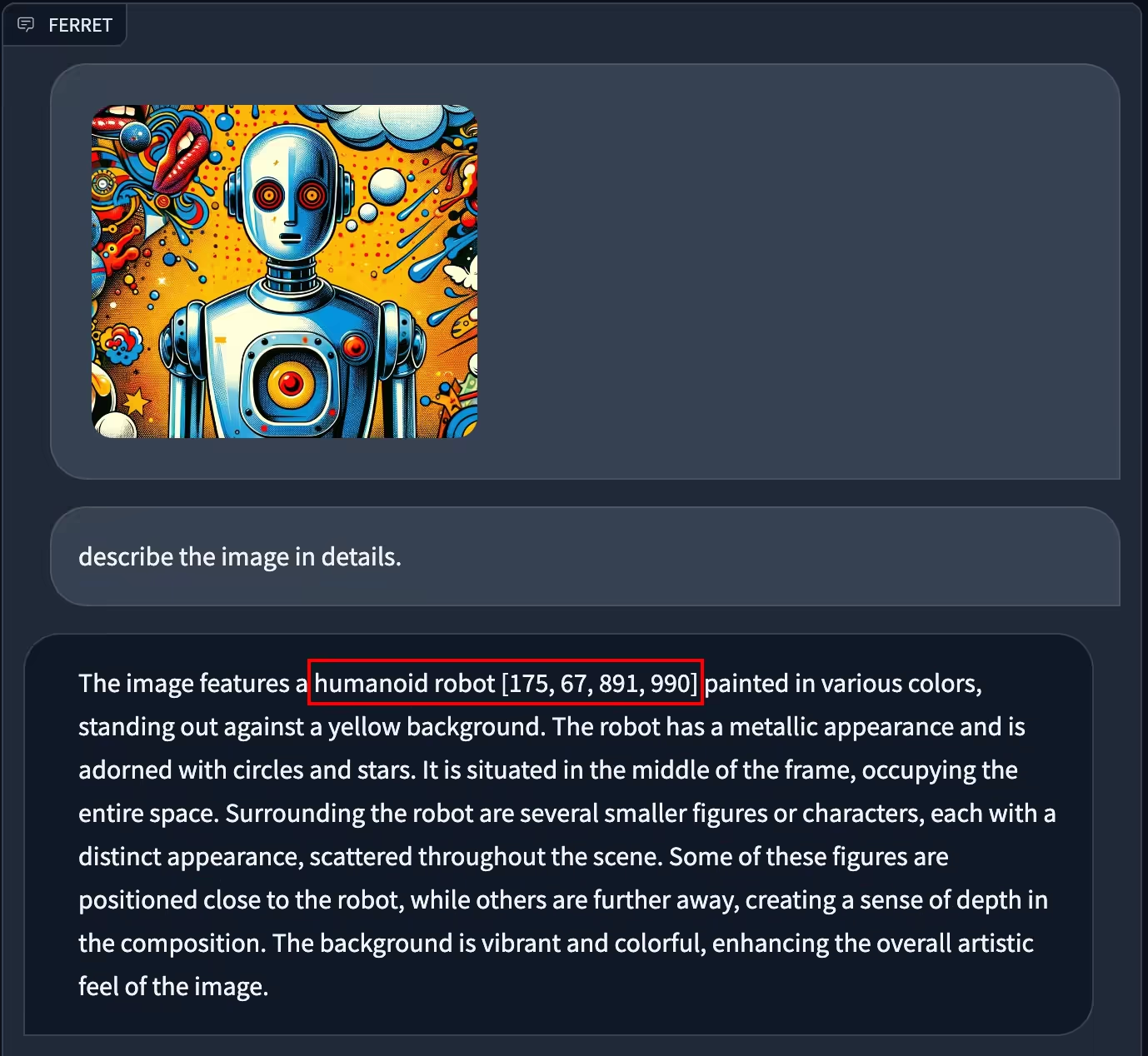

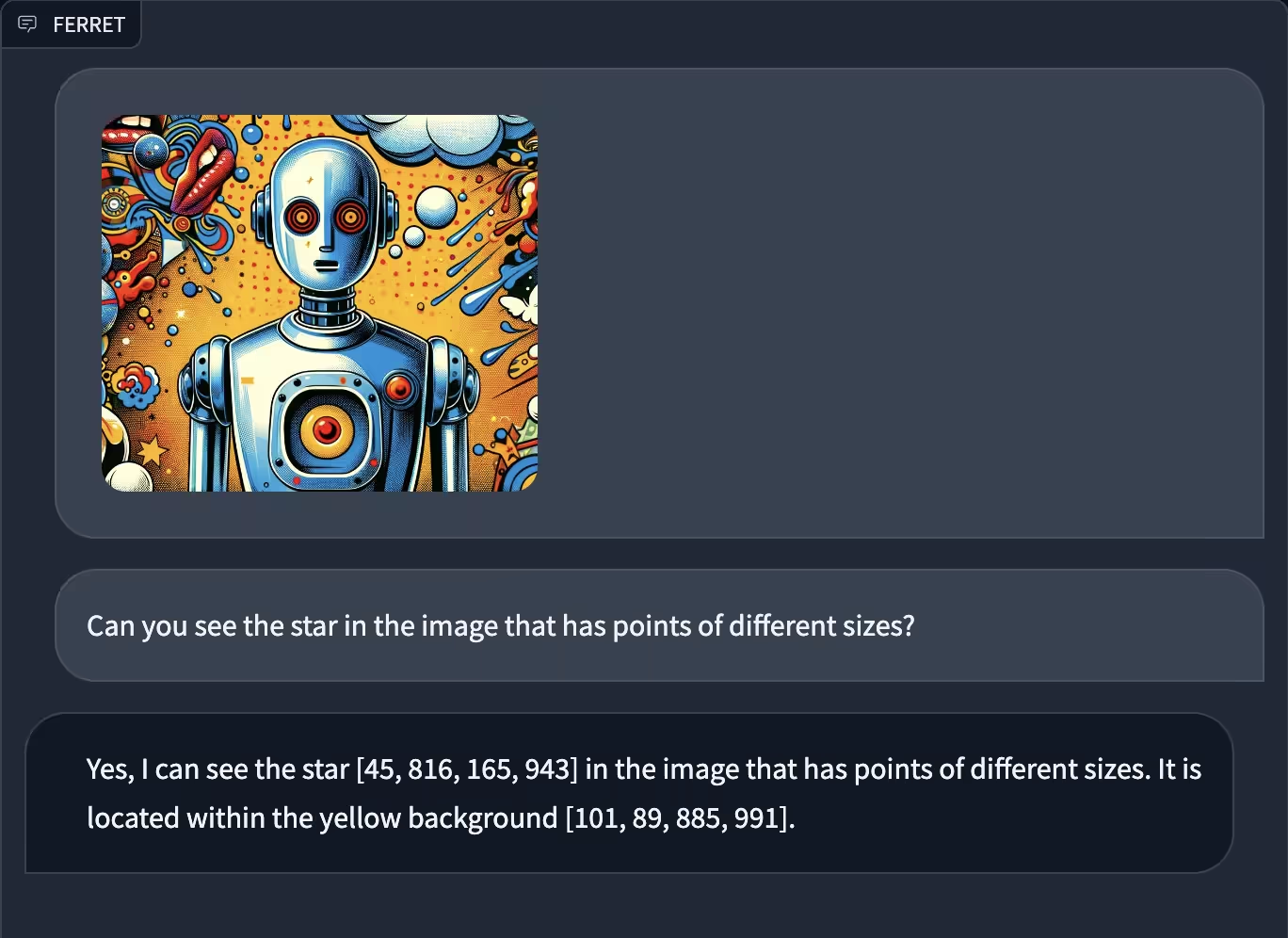

Now let's look at an example for spatial referring.

In the above example we can see that the Apple ML Ferret has not only understood what object/things are in the image but also where the humanoid robot is present in the image. After the output is generated completely, we can press the show location button to see how the Apple ML Ferret model has mapped the object(humanoid robot).

We can see the red square around the robot our Apple ML Ferret model has highlighted after mapping us the co-ordinates.

Open-Vocabulary referring

Open-Vocabulary referring is the model's ability to understand and process a wide and flexible range of words and phrases when referring to objects or elements within an image. This means that the Apple ML Ferret will understand however you explain an object to it and it will understand what object you're referring to in the image and map it with co-ordinates it for you.

Example:

In this image we can see that I have asked for a "star that has points of different sizes" and the MLLM was able to correctly guess the where the star is present in the image.

Grounding

Grounding is the ability of the model(Apple ML Ferret) to map or link textual descriptions to the corresponding parts or objects in an image. It is also know as Fine-grained grounding.

When Apple ML Ferret is grounding something it is not just understanding the text input and recognizing the object in the image. Instead, it's actively linking which parts of the image correspond to which parts of the textual description.

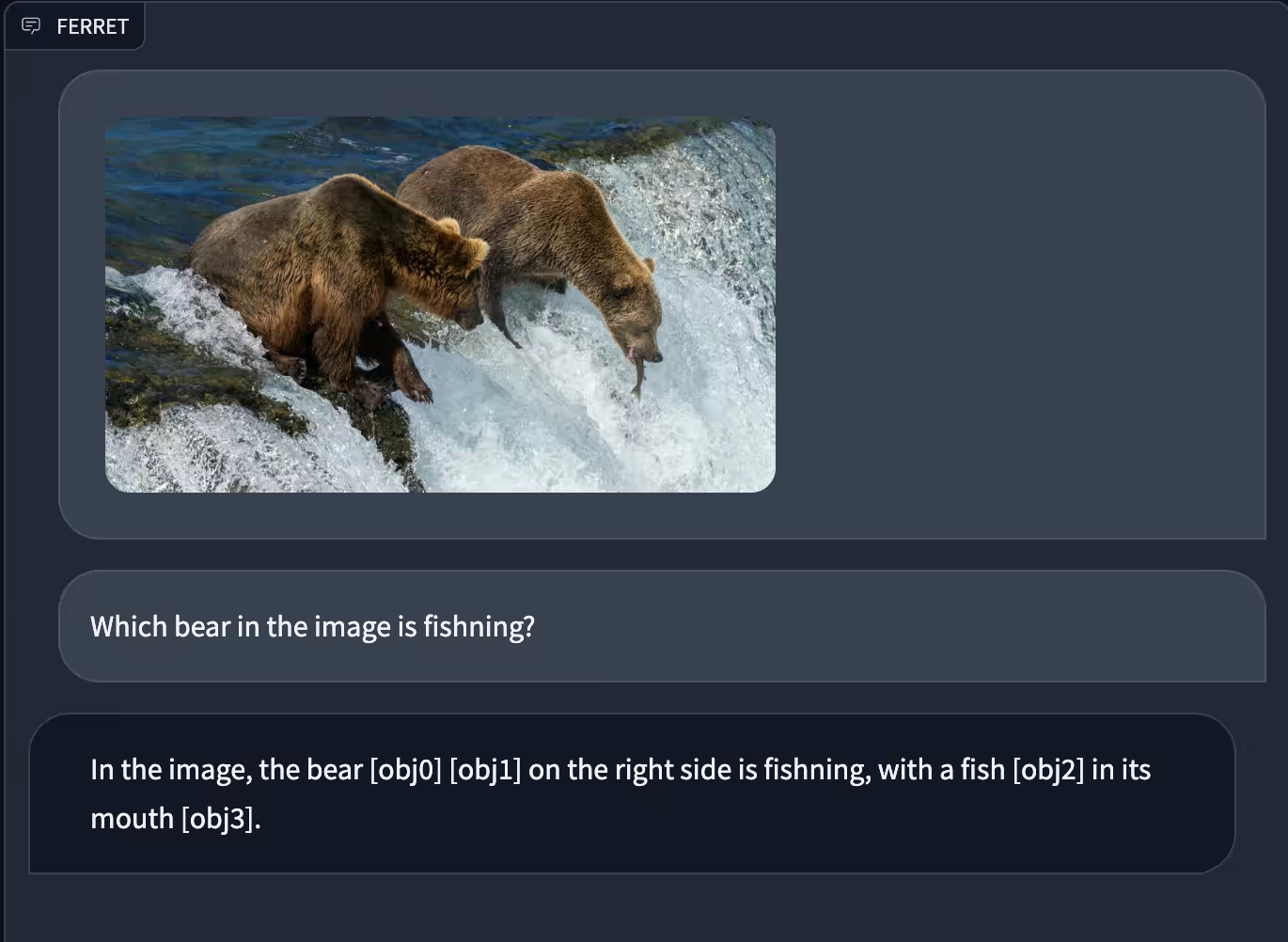

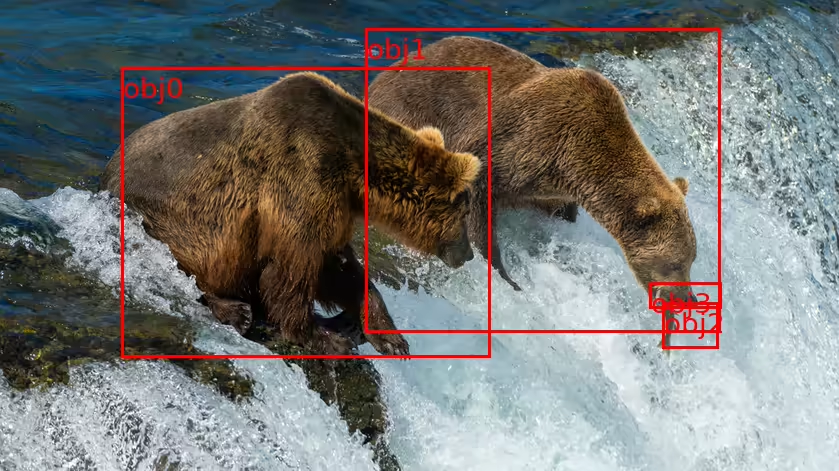

In the above picture we can see that I asked the Model which one of the two bears are fishing and it was able to correctly identify that the bear on the right is fishing.

It was even able to map the fish and the mouth of the bear that was fishing!

Enhanced interaction techniques

Apple ML Ferret has different interaction features for the enhancement of the interaction with the image.

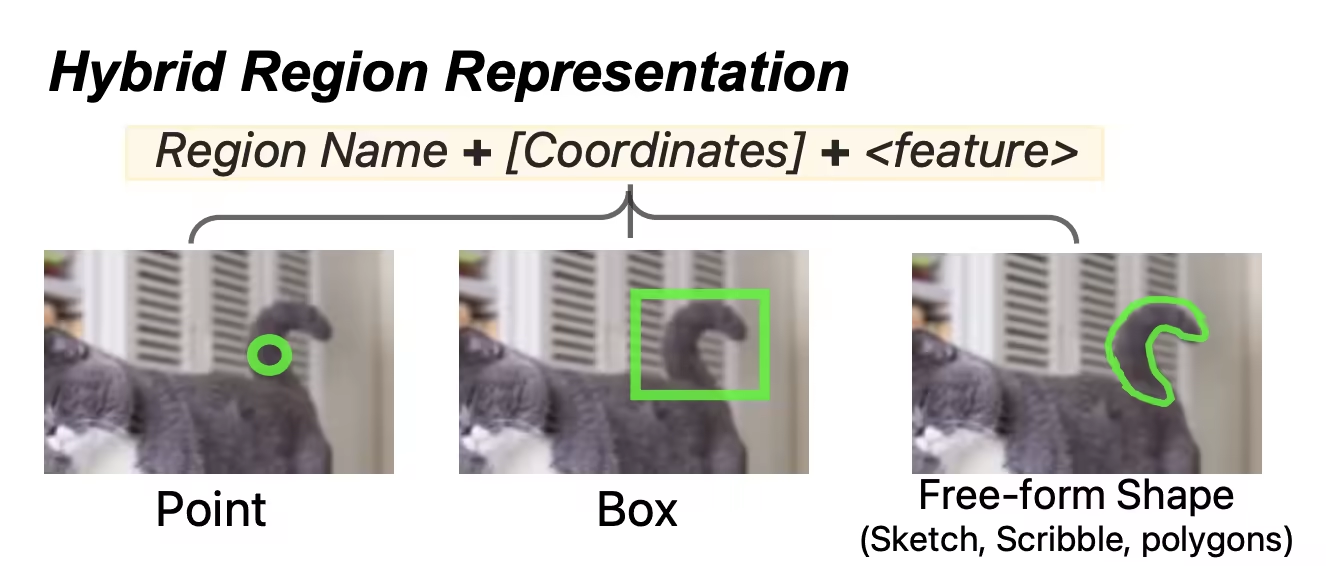

There are three types of interaction:

Point: This feature allows users to refer to a specific point in an image. By selecting a point, Ferret can understand and respond to queries related to that particular spot in the picture.

Box: With the Box feature, users can draw a box around an area in an image. This helps Ferret in focusing on and analyzing the content within that boxed region, enabling it to provide more specific responses based on the selected area.

Scribble: The Scribble feature enables users to freely draw or scribble over an image. This allows for more granular interaction, as Ferret can interpret and respond to queries about the scribbled area, offering detailed insights based on the user's input on the image.

Comprehensive Dataset - GRIT

The GRIT(Ground-and-Refer Instruction-Tuning) dataset is a dataset that has been curated for the specific motive to improve the Spatial referring and Open-grained grounding capabilities of the Apple ML Ferret. It includes approximately 1.1 million samples and features rich spatial knowledge. The dataset is designed to be challenging, with 95,000 hard negative samples to ensure robustness and efficiency in the model's ability to understand and ground detailed, open-vocabulary descriptions within images.

Data

We don't have any specific information on the contents of the dataset but typically, a dataset like GRIT(Ground-and-Refer Instruction-Tuning) would contain paris of images and textual descriptions. Each pair would be of a highly detailed and descriptive sentence referring to the specific objects or actions in the image, along with the image itself. The descriptions would be tied to the picture like a puzzle, making Apple ML Ferret work hard to figure out how to ground the descriptions with the objects/things in the image that corresponds to it.

Applications and use cases

The advanced grounding and referring capabilities of the Apple ML Ferret is ideal for many things like

- Visual Search Engines: Enhancing image-based queries with precise text-image matching.

- Automated Image Captioning: Generating detailed, context-aware captions for images.

- Assistance for Visually Impaired: Providing descriptive audio narratives of visual scenes.

And many more.