Axolotl

Table of Contents

TLDR

Axolotl is a tool designed for fine-tuning various AI models, providing support for customized configurations and architectures. It can be easily configured using a simple yaml file or CLI overwrite. It supports fullfinetune, lora, qlora, relora, and gptq. It supports more than 25 dataset formats like alpaca, sharegpt, llama-2.

If you want to know more about Axolotl in detail, jump here.

Walkthrough

How to launch Axolotl in jarvisLabs

Navigate to the axolotl instance on the jarvisLabs' frameworks page.

After you click the framework you will be redirected to the launch page. Check out the details about the launch page here.

How to train your model

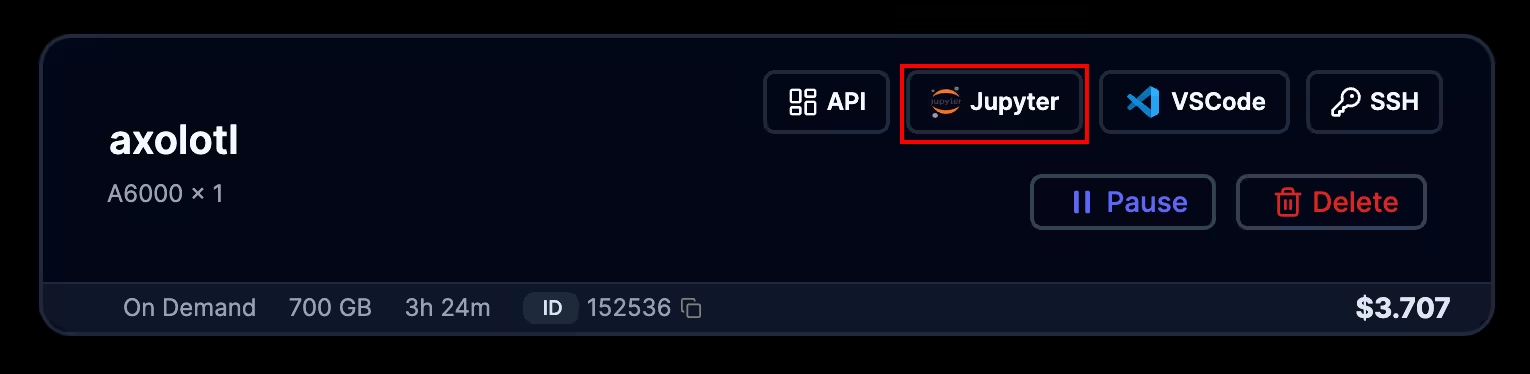

After the instance is successfully launched, you can navigate to your instances page and click the JupyterLabs of the axolotl instance.

Then use the following command.

cd /workspace/axolotl

To fine-tune a model enter the following command

accelerate launch -m axolotl.cli.train path/to/your/file_name.yml

In axolotl users can use the pre-configured presets in the examples folder. These ready-to-use examples simplify the process, allowing users to easily adapt and optimize various models.

To prompt your model using gradio

accelerate launch -m axolotl.cli.inference path/to/your/file_name.yml --lora_model_dir="output/directory/of/model" --gradio

To inference your model

accelerate launch -m axolotl.cli.inference path/to/your/file_name.yml --lora_model_dir="output/directory/of/model"

Deep Dive

Axolotl is a versatile tool designed for fine-tuning various AI models, supporting a wide range of architectures with custom dataset format allowing users to work with different data source and offering user-friendly configurations. It also incorporates advanced techniques such as PEFT (Parameter Efficient Fine-tuning Techniques), enhancing its effectiveness and efficiency in model optimization. Axolotl facilitates working with single and Multiple GPUs via FSDP or DeepSpeed.

Supported Architecture

Axolotl supports a wide variety of models and fine-tuning methods. Check them out below.

| fp16/fp32 | lora | qlora | gptq | gptq w/flash attn | flash attn | xformers attn | |

|---|---|---|---|---|---|---|---|

| llama | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

| Mistral | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

| Mixtral-MoE | ✅ | ✅ | ✅ | ❓ | ❓ | ❓ | ❓ |

| Pythia | ✅ | ✅ | ✅ | ❌ | ❌ | ❌ | ❓ |

| cerebras | ✅ | ✅ | ✅ | ❌ | ❌ | ❌ | ❓ |

| btlm | ✅ | ✅ | ✅ | ❌ | ❌ | ❌ | ❓ |

| mpt | ✅ | ❌ | ❓ | ❌ | ❌ | ❌ | ❓ |

| falcon | ✅ | ✅ | ✅ | ❌ | ❌ | ❌ | ❓ |

| gpt-j | ✅ | ✅ | ✅ | ❌ | ❌ | ❓ | ❓ |

| XGen | ✅ | ❓ | ✅ | ❓ | ❓ | ❓ | ✅ |

| phi | ✅ | ✅ | ✅ | ❓ | ❓ | ❓ | ❓ |

| RWKV | ✅ | ❓ | ❓ | ❓ | ❓ | ❓ | ❓ |

| Qwen | ✅ | ✅ | ✅ | ❓ | ❓ | ❓ | ❓ |

Datasets

Axolotl supports a wide variety of datasets that you can fine-tune your model on.

Alpaca

Want your model to adapt to different instructional guidance to craft a story in any format? Use alpaca.

alpaca: instruction; input(optional)

{ "instruction": "...", "input": "...", "output": "..." }

Sharegpt

Have dynamic conversations with your model using sharegpt datasets.

sharegpt: conversations where from is human/gpt. (optional: system to override default system prompt)

{ "conversations": [{ "from": "...", "value": "..." }] }

llama-2

Use llama-2, which is similar to sharegpt but with unique configuration.

llama-2: the json is the same format as sharegpt above. yml datasets: - path: <your-path> type: sharegpt conversation: llama-2

Raw Corpus

For unaltered and raw data format use completion

completion: raw corpus

{ "text": "..." }

Fine-tuning Methods

Axolotl supports a wide range of fine-tuning methods. They are:

Fullfinetune

LoRA

QLoRA

GPTQ

ReLoRA

FullFinetuning

FullFinetuning is the process where all the parameters of the model is fine-tuned. This process usually takes a long time and is GPU intensive. Although FullFinetuning takes a long time, all the weights of the model get fully adapted to the given dataset which may result in high performance. It also has a higher chance of over fitting.

To FullFinetune a model, you can just leave the adapter configuration empty in your configuration yml.

adapter:

LoRA

LoRA is technique which is used for memory efficient fine-tuning. While fine-tuning a modal with LoRA will reduce number of parameters that we fine-tune in any of the attention layer we choose(q_proj, v_proj, k_proj etc). When we train on a lower rank of the initial weight matrices the it will drastically reduce the memory requirement.

To fine-tune a model using LoRA in axolotl, you have to modify the adapter setting in your configuration yml file to lora.

adapter: lora

You can see that the adapter option is set to lora in the above yml file configuration.

QLoRA

Qlora is also adapts the same low rank adaption method as LoRA. But it also uses a process called Quantization. Quantization reduces the precision value of the parameter to a lower precision. This results in increase of efficiency and faster computation.

To fine-tune a model using qLoRA in axolotl, you have to modify the adapter setting in your configuration yml file to qlora.

adapter: qlora

GPTQ

GPTQ (Quantized Training of GPT models) is a technique that combines the principles of quantization with the training of generative pre-trained transformer(GPT) models. Quantization involves reducing the precision of the model's parameters, which leads to a more compact model size and faster inference times, making it especially beneficial for deployment in resource-constrained environments.

base_model: #Choose any GPTQ model

gptq: true

Configuration parameters

In this section we will go through the important yml configurations that you need to know when fine tuning your model in axolotl.

| Parameter | Description | Data Type |

|---|---|---|

| Base model | Path or name of the pre-trained model to be fine-tuned. | String |

| Model type | Specifies the type of language model used in fine-tuning. | String |

| Tokenizer type | Specifies the type of tokenizer to split the text in the dataset. Custom tokenizer can also be provided. | String |

| Load in 4 bits | Loading parameter with 4 bits reduces the precision of parameters, leading to lower model performance and memory usage. | Boolean |

| Load in 8 bits | Loading parameters with 8 bits offers higher precision compared to 4 bits but may increase memory usage. | Boolean |

| Bfloat16 | A floating-point format using 16 bits, offering higher precision than 8-bit or 4-bit loading. | Boolean |

| Fp16 | Half-precision floating point format using 16 bits. Offers higher precision than Bfloat16 but lower than Fp32. | Boolean |

| tf32 | TensorFlow 32, introduced by NVIDIA, uses 32 bits like Fp32 but with precision between Fp16 and Fp32. Recommended for NVIDIA GPUs. | Boolean |

| SequenceLength | Determines the maximum length of input sequences for processing the model during fine-tuning. | Integer |

| Sample Packaging | Specifies how input data is organized into batches. | String |

| BathSize | Number of input data processed together in each training iteration. | Integer |

| Padding | Checks whether the sequence in each batch has the same length. Adds 0 for sequences with varying lengths if set to true. | Boolean |

| Shuffling | Whether to shuffle the order of input samples between epochs or batches. | Boolean |

| Adapter | Specifies the type of adapter architecture used in fine-tuning, such as LoRA, QLoRA, GPTQ. | String |

| Lora_r | Decides the number of simpler steps applied to the model's computations during fine-tuning. Recommended to match the number of hidden layers. | Integer |

| Lora_alpha | Controls the scaling factor while fine-tuning the model. A higher value means updating matrices have a stronger impact. | Integer |

| lora_dropout | Controls the dropout rate during the LoRA fine-tuning process to balance between preventing over-fitting and effective learning. | Float |

| Output_dir | Specifies the directory where the output of the training process will be saved, including trained model weights, metrics, logs, etc. | String |

| Num_epochs | Number of times the model will be trained using the entire dataset. | Integer |

| Gradient_accumulation | Technique to fit large batches of data into memory by accumulating gradients over several iterations before updating weights. | Integer |

| Micro_batch_size | Divides the entire batch of training data into smaller subsets for efficient memory management. | Integer |

| Learning rate | Determines the speed at which the model learns, aiming for the best outcome without going too fast or too slow. | Float |

| Train_on_inputs | Determines if the model is trained only on the provided input data or has extra information during training. | Boolean |

| Eval_batch_size | Determines how many example data are processed in parallel during the evaluation phase. | Integer |

| Eval_steps | Indicates how frequently the model checks its performance on the validation dataset during training. | Integer |

| Optimizer | Algorithm used by the model to adjust its parameters during training based on the calculated gradients. | String |

| Warmup_steps | Number of steps where the learning rate gradually increases from a low initial value to the set learning rate. # cannot use with warmup_ratio | Integer |

| Warmup_ratio | Proportion of total training epochs or steps during which the learning rate is gradually increased from a low initial value. # cannot use with warmup_steps | Float |

Weights and Biases Logging

You can also use weights and biases to monitor your training process. To monitor your process follow the steps below.

Login to wandb

Login to your wandb account

wandb login

After that you have to add the following wandb parameters to your config yml file.

wandb_project: #project name

wandb_entity: # Replace with your W&B username or team name

wandb_watch: True # Optional: set to True if you want to log gradients and parameters

wandb_name: # A descriptive name for this specific run

wandb_log_model: True # Set to True if you want to log the model to W&B

And now you navigate to your weights and biases profile and monitor the training process.